According to a French saying, it is wise to turn your tongue seven times in your mouth before you speak.

The publication of a recent blog post (https://blog.ceetron.com/2015/05/05/big-3d-in-the-cloud/) suggests I still haven’t learned this wisdom. A colleague argued in that post that the typical size of today’s engineering simulation unstructured mesh topologies should be counted in the tens of millions of nodes. My friend also forecasted that within a decade these meshes would possibly contain one or two orders of magnitude more.

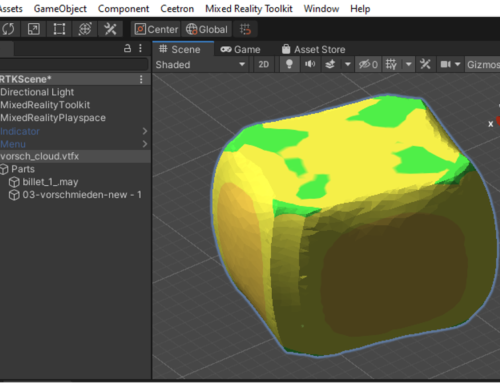

Coming from a material-forming simulation background, I wondered at these figures. In my world, where transient simulations are the norm, current models rarely exceed 1 million nodes. A 100 000-node model can be considered typical, and this figure has not seen the exponential growth assumed in that post: in twenty years it has increased by only one order of magnitude.
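To put the two growth rates side by side, here is a small back-of-the-envelope sketch. It assumes steady compound growth, which is my simplification and not something either post claims, and the function names are purely illustrative.

```python
def annual_growth_factor(total_factor: float, years: float) -> float:
    """Yearly multiplier that compounds to `total_factor` over `years`."""
    if total_factor <= 0 or years <= 0:
        raise ValueError("total_factor and years must be positive")
    return total_factor ** (1.0 / years)


def project_size(nodes_today: float, total_factor: float,
                 years: float, horizon: float) -> float:
    """Extrapolate a model size `horizon` years ahead at a steady rate."""
    return nodes_today * annual_growth_factor(total_factor, years) ** horizon


if __name__ == "__main__":
    # Observed in material forming: one order of magnitude in twenty years.
    observed = annual_growth_factor(10, 20)
    # Forecast in the other post: one to two orders of magnitude in a decade.
    forecast_low = annual_growth_factor(10, 10)
    forecast_high = annual_growth_factor(100, 10)

    print(f"observed:      x{observed:.3f} per year")
    print(f"forecast low:  x{forecast_low:.3f} per year")
    print(f"forecast high: x{forecast_high:.3f} per year")

    # A typical 100 000-node model, ten years on, at the observed rate.
    print(f"{project_size(100_000, 10, 20, 10):,.0f} nodes")
```

One order of magnitude in twenty years works out to roughly 12% growth per year, while two orders of magnitude in a decade requires close to 60% per year. At the slower rate, a 100 000-node model would only reach about 320 000 nodes after ten years.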

I was challenged to expand on this subject and write this blog post in the form of a technology prediction of typical sizes for engineering models – whether FEA or CFD – in 2020 and in 2025. The scope of the models is limited to those used for optimization purposes, with a need for interactivity, result inspection, input modification and simulation rerun. This is in contrast to compute-intensive simulation domains such as topology optimization, or to traditional scientific HPC such as climate prediction calculations. According to industry sources, a large topology optimization simulation today would include models of 2-4 M finite elements while a large airflow analysis in the automotive industry would include models of up to 400 M cells.

Of course, the available hardware dictates what model sizes can be addressed. Were we to rely on the evolution of hardware alone to predict future model sizes, some sort of Moore’s law would probably be a reasonable bet. But things are not that clear-cut.

First, a one-to-one relation between hardware resources and model size assumes that all the additional power provided by the hardware is used only to increase model sizes, i.e., to increase result precision. This is not always the case: I have observed at least one exception in the material forming simulation field. Furthermore, even if the choice is to use larger models, taking full advantage of new hardware resources requires that the numerical methods used to solve the physical equations governing the simulated phenomena be adapted to the architectures of the machines on which the computations are performed.

The need for adapted numerical methods and the questionable economic efficiency of choosing to blindly upgrade model sizes are elements that give some grounds to my hasty remarks on soaring model sizes. At least, ten-fold increases every five years are figures that need to be tempered. And this time, my tongue has been turned in my mouth the appropriate number of times.

What are the options when new, more efficient hardware becomes available? In other words, how do users of engineering applications benefit from faster CPUs and more memory? This is both an engineering and an economic choice. The simulation must provide results that are precise enough to answer the questions engineers are addressing. Its results must be delivered on time: spot-on precision doesn’t help if the tender deadline has passed. Finally, the added power can be put to other uses, e.g., running lower-precision yet acceptable models many times within an automated optimization procedure.

The case for choosing larger models over faster computations is thus intimately linked to the desired level of precision. In short, if the results are not precise enough, there is no other way forward but to increase the model size and accept the longer run time (as long as it is not a show-stopper). This could be the case if the study targets phenomena that take place at smaller spatial scales than the current models can cope with: microstructural analysis, DNS for turbulent flow, etc. It could also be true if the domain of the computation itself is extended, for example to include interactions that were previously neglected.

Additional computational power is sometimes the result of new hardware architectures. We have seen such breakthroughs on several occasions over the years: the move from single to multiple processors, giving distributed-memory parallel machines; the upgrade from 32-bit to 64-bit operating systems; multicore processors and their underlying shared-memory architecture; very efficient GPUs made accessible to non-graphics applications; and more recently, cloud computing on remote machines. All these changes brought more computational power with them. Yet before FEA and CFD software packages could take full advantage of them, it sometimes took years to develop and stabilize efficient numerical methods that capitalize on the new hardware. And even then, the new hardware power does not always translate linearly into faster solvers or larger models. Parallel computing illustrates this quite convincingly through Amdahl’s law: there is a limit to how much parallelization can speed up a computation.
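For readers who want to see the ceiling that Amdahl’s law imposes, here is a minimal sketch. The 95% parallel fraction is an arbitrary figure chosen for illustration, not a measurement from any particular solver, and the function names are my own.

```python
def amdahl_speedup(parallel_fraction: float, n_processors: int) -> float:
    """Amdahl's law: speedup on n processors when only part of the
    run time can be parallelized."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must lie in [0, 1]")
    if n_processors < 1:
        raise ValueError("n_processors must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / n_processors)


def amdahl_limit(parallel_fraction: float) -> float:
    """Upper bound on the speedup as the processor count grows without limit."""
    serial_fraction = 1.0 - parallel_fraction
    return float("inf") if serial_fraction == 0.0 else 1.0 / serial_fraction


if __name__ == "__main__":
    p = 0.95  # assumed share of the run time that parallelizes
    for n in (1, 8, 64, 1024):
        print(f"{n:>5} processors: x{amdahl_speedup(p, n):.1f}")
    print(f"ceiling: x{amdahl_limit(p):.1f}")
```

With 5% of the run time stuck in serial code, the speedup can never exceed a factor of 20, however many processors are thrown at the problem.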

Well, I think I have made my point. Very large models such as those mentioned by my colleagues do indeed exist, and I do not doubt that we will see much larger ones in the future. But asking what a typical model size will be in 5 or 10 years is simply the wrong question. The idea of the ‘typical model’ is a chimaera, given that average model sizes vary so widely from one use case to another. In mature markets, such as the simulation of forging, injection molding, or casting, larger models will only arise from a demand for finer physics, and hence more nodes. In other words, the resources provided by new hardware are being used directly to perform more computations, which delivers better solutions at the existing model precision. In markets where physical modeling still compensates for the lack of computational power, as turbulence models do to avoid direct numerical simulation, it seems obvious that the choice will be to increase model sizes until the computational power for such direct simulation is both available and cost-effective.

Having discussed a draft of this post with some of my colleagues, I expect that one of us will return to the model size issue in the near future, equipped with more facts and with external perspectives from key players in the industry. Stay tuned.

Andres
